Data Access

Personal data in the hands of AI can be either detrimental or incredibly useful to us. We still have the chance to decide, if we act in time.

As the field of Artificial Intelligence continues to grow at a rapid pace, we are closer than ever to achieving what many AI experts, researchers, IT engineers, and computer scientists have long feared: Artificial General Intelligence (AGI), or human-level AI. AI experts still debate whether AGI will ultimately benefit or harm humanity. Meanwhile, the AI Control Problem, also known as the AI Safety Solution Problem, has turned from a dramatic talking point into a research field that has lately been gaining attention from experts in the technology sector.

The AI Control Problem concerns the possibility of AI surpassing human intelligence and outperforming us in every area of life, to the point where we might be dominated by superintelligent machines. The biggest issue is that there is no “STOP” button and no way to return to ANI, or Artificial Narrow Intelligence. ANI is the single-task AI that exists today, and it does not pose this danger. As superintelligent machines improve themselves, we may lose track of how intelligent they are becoming and fail to understand the threats they could pose.

The AI Control Problem is a vast and complex topic. We have approached it from six different angles within the field of AI and examined how large a role each one plays in the problem:

Personal data in the hands of AI can be either detrimental or incredibly useful to us. We still have the chance to decide, if we act in time.

As artificial intelligence moves out of data science labs and into the real world, biased AI systems are likely to become an increasingly widespread problem. How does that affect us?

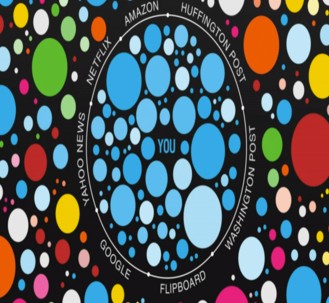

A filter bubble is an algorithmic bias that skews or limits the information an individual user sees on the internet.

Giving intelligent computers the ability to see and understand images could prove more dangerous than beneficial to humanity.

In a world full of false information, users are losing their sense and reason as it becomes ever harder to tell true information from false. What can we do about it?

Combining AI technology with humans can prove immensely useful, but is it worth the risk?